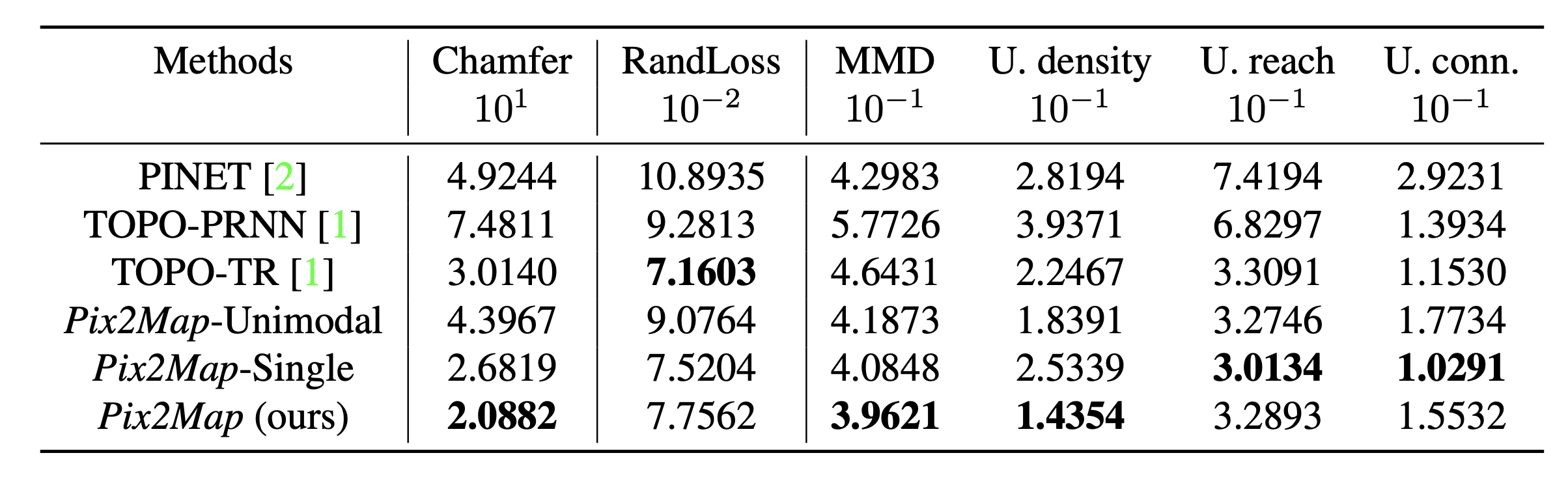

Baseline comparisons. For a fair comparison with the prior art [1], in this experiment we (i) train Pix2Map on frontal \(50m \times 50m\) road-graphs (as opposed to our default setting of predicting the surrounding \(40m \times 40m\) area). Moreover, we (ii) train Pix2Map with a single frontal view (Pix2Map-Single) to ensure consistent comparisons to baselines. Importantly, even in this setting, our method still outperforms the baselines by a large margin: it achieves a Chamfer distance of 2.6819, compared to 3.0140 obtained by the closest competitor, TOPO-TR [1].
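For readers unfamiliar with the metric, the following is a minimal sketch of a symmetric Chamfer distance between two sets of 2D road-graph node positions. It is illustrative only: the function name `chamfer_distance`, the toy coordinates, and the choice to average the two directional terms using unsquared Euclidean distances are assumptions, and the exact variant used in the evaluation above (including any point sampling along edges) may differ.

```python
import math


def chamfer_distance(pred, gt):
    """Symmetric Chamfer distance between two non-empty sets of 2D points.

    Hypothetical helper for illustration; not the evaluation code of the paper.
    """
    if not pred or not gt:
        raise ValueError("both point sets must be non-empty")

    def one_sided(src, dst):
        # Mean distance from each point in src to its nearest neighbor in dst.
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    # Assumption: average the two directions. Other conventions sum them
    # or use squared distances instead.
    return 0.5 * (one_sided(pred, gt) + one_sided(gt, pred))


# Toy example: predicted nodes are offset by 1 m from the ground truth.
pred_nodes = [(0.0, 0.0), (10.0, 0.0)]
gt_nodes = [(0.0, 1.0), (10.0, 1.0)]
print(chamfer_distance(pred_nodes, gt_nodes))  # prints 1.0
```

Lower values indicate that predicted and ground-truth nodes lie closer together, which is the sense in which 2.6819 improves on 3.0140.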